The multilingual embedding model described here is a state-of-the-art solution for producing embeddings used in chat responses. Comparing paragraph embeddings with the embedding of the incoming message helps keep the resulting replies contextually relevant and coherent. The solution is built on the BAAI/bge-m3 model, which is widely recognized for its efficiency and accuracy. Inputs are kept within a 500-word limit so that the embedded text stays succinct and focused on the conversation at hand; the model itself accepts inputs of up to 8192 tokens. The model is available on Hugging Face at https://huggingface.co/BAAI/bge-m3
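Matching a message against paragraphs usually means scoring each paragraph embedding against the query embedding with cosine similarity and keeping the best matches. The sketch below assumes the vectors have already been produced (for example from BGE-M3's dense output, which has 1024 dimensions); `cosine_similarity` and `rank_paragraphs` are hypothetical helpers for illustration, not part of FlagEmbedding or of this solution's actual code.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_paragraphs(
    query_vec: Sequence[float],
    paragraph_vecs: Sequence[Sequence[float]],
    top_k: int = 3,
) -> List[Tuple[int, float]]:
    """Return (paragraph index, score) pairs, best match first."""
    scored = [(i, cosine_similarity(query_vec, vec)) for i, vec in enumerate(paragraph_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    # Toy 3-dimensional vectors stand in for real 1024-dimensional embeddings.
    query = [1.0, 0.0, 0.0]
    paragraphs = [[0.0, 1.0, 0.0], [0.9, 0.1, 0.0], [0.5, 0.5, 0.0]]
    print(rank_paragraphs(query, paragraphs, top_k=2))
```

The top-ranked paragraphs would then be passed to the chat model as context for its reply.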

For optimal performance, deploy this model on a GPU-equipped EC2 instance on AWS. GPUs are specialized hardware built for the massively parallel computation that machine learning and deep learning workloads, including this embedding model, rely on. Running the model on a GPU therefore yields significantly faster response times than running it on a CPU. The model can run on a CPU-only instance, but without hardware acceleration its response times may be considerably longer.

The solution is served with FastAPI and packaged with Docker. FastAPI is a modern, high-performance Python web framework for building APIs that integrates easily with other tools and services. With FastAPI, developers can quickly create endpoints that serve the embedding model and integrate it into existing systems or applications. Packaging the model and its API in a Docker container streamlines deployment and management and keeps the environment consistent and portable across machines.
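One way to keep the API layer testable is to put the request handling in a plain function and let the web framework only route requests to it. The sketch below is a hypothetical, framework-agnostic handler: the `text` and `embedding` field names, the `MAX_WORDS` cap, and the injected `encode` callable are all assumptions, and in production `encode` would wrap the loaded BGE-M3 model.

```python
from typing import Callable, Dict, List

# Hypothetical cap mirroring the 500-word input limit described earlier.
MAX_WORDS = 500


def handle_embed_request(body: Dict, encode: Callable[[str], List[float]]) -> Dict:
    """Validate a JSON request body and return a JSON-serializable response.

    `encode` is injected so the handler can be exercised without loading the
    model; in a real service it would call the embedding model.
    """
    text = body.get("text")
    if not isinstance(text, str) or not text.strip():
        raise ValueError("request body must contain a non-empty 'text' string")
    if len(text.split()) > MAX_WORDS:
        raise ValueError(f"'text' exceeds {MAX_WORDS} words")
    vector = [float(x) for x in encode(text)]
    return {"embedding": vector, "dimension": len(vector)}


if __name__ == "__main__":
    # Stand-in encoder that returns a fixed-size toy vector.
    def fake_encode(s: str) -> List[float]:
        return [float(len(s)), 0.0, 1.0]

    print(handle_embed_request({"text": "What is BGE M3?"}, fake_encode))
```

In FastAPI, this function would typically sit behind a POST route (for example `@app.post("/embed")`, a hypothetical path), with `encode` bound to the model and the `ValueError` translated into a 400 response via `fastapi.HTTPException`.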

Step-by-Step Procedure to Install and Configure the Embedding Model on EC2

Prerequisites

Ubuntu OS

Hardware requirements:

1 × NVIDIA T4 GPU with 16 GB of GPU memory

8 vCPUs

32 GB RAM

Disk space: 100 GB
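Before installing anything, it can help to confirm that the instance meets these requirements. The following is a hypothetical preflight check, not part of the original procedure: it is Linux-only (it reads physical memory through `os.sysconf`), treats GB as GiB, and applies an assumed 10% tolerance because the OS reports slightly less memory and disk than the nominal sizes. GPU checks are left to `nvidia-smi`.

```python
import os
import shutil
from typing import Dict, Tuple

# Minimums taken from the prerequisites list.
REQUIRED_VCPUS = 8
REQUIRED_RAM_GB = 32
REQUIRED_DISK_GB = 100
# Assumed tolerance: usable RAM/disk is reported a little below the nominal size.
TOLERANCE = 0.9
GIB = 1024 ** 3


def check_requirements(vcpus: int, ram_gb: float, disk_gb: float) -> Dict[str, bool]:
    """Return a pass/fail flag for each requirement."""
    return {
        "vcpus": vcpus >= REQUIRED_VCPUS,
        "ram": ram_gb >= REQUIRED_RAM_GB * TOLERANCE,
        "disk": disk_gb >= REQUIRED_DISK_GB * TOLERANCE,
    }


def measure_host(path: str = "/") -> Tuple[int, float, float]:
    """Measure vCPUs, RAM (GiB) and total disk (GiB) of the current host."""
    vcpus = os.cpu_count() or 0
    ram_bytes = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    disk_bytes = shutil.disk_usage(path).total
    return vcpus, ram_bytes / GIB, disk_bytes / GIB


if __name__ == "__main__":
    print(check_requirements(*measure_host()))
```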

Embedding Model Server Installation

1. Set Up Your AWS Infrastructure Using EC2

Navigate to the EC2 service in the Amazon Web Services (AWS) console and click Launch instance.

2. Select the Right AMI

Enter a name for your instance and select Ubuntu as the AMI. Under Ubuntu, pick the Deep Learning AMI GPU PyTorch 2.0.1 (Ubuntu 20.04) 20230926 image, which comes with Ubuntu, NVIDIA drivers, CUDA, and PyTorch preinstalled.

3. Configure Your Instance Type

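Select the g4dn.2xlarge instance type. It provides one NVIDIA T4 GPU with 16 GB of GPU memory, 8 vCPUs, and 32 GiB of memory, matching the hardware requirements above. When configuring storage, set the root volume to at least 100 GB.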

4. Launch Your EC2 Instance

Launch the instance by clicking the "Launch instance" button.
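The console steps above can also be expressed as parameters for boto3's EC2 `run_instances` call, which is handy for repeatable deployments. This is a sketch under several assumptions: the AMI ID, key pair, and instance name are placeholders, the AMI ID must be looked up for your region, and the root device name `/dev/sda1` should be confirmed for the AMI you select. The code only builds the parameters and does not contact AWS.

```python
from typing import Any, Dict


def build_launch_params(ami_id: str, key_name: str, name: str) -> Dict[str, Any]:
    """Keyword arguments for boto3's EC2 client run_instances()."""
    return {
        "ImageId": ami_id,
        "InstanceType": "g4dn.2xlarge",  # 1 x T4 GPU, 8 vCPUs, 32 GiB RAM
        "KeyName": key_name,
        "MinCount": 1,
        "MaxCount": 1,
        # 100 GB root volume per the prerequisites; /dev/sda1 is an assumed
        # root device name, so verify it against the chosen AMI.
        "BlockDeviceMappings": [
            {"DeviceName": "/dev/sda1", "Ebs": {"VolumeSize": 100, "VolumeType": "gp3"}}
        ],
        "TagSpecifications": [
            {"ResourceType": "instance", "Tags": [{"Key": "Name", "Value": name}]}
        ],
    }


if __name__ == "__main__":
    params = build_launch_params(
        ami_id="ami-xxxxxxxxxxxxxxxxx",  # placeholder: Deep Learning AMI ID for your region
        key_name="my-key-pair",  # hypothetical key pair name
        name="bge-m3-embedding-server",  # hypothetical instance name
    )
    print(params)
```

With AWS credentials configured, the dictionary can be passed as `boto3.client("ec2").run_instances(**params)`. The instance's security group must also allow inbound SSH (port 22) for the login step.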

5. Log In to the Server Using PuTTY

To log in to the server with PuTTY, you need the following details:

- Server IP: [insert server IP here]

- Auto-login username: [insert auto-login username here]

- Key for login to server: [insert key for login to server here]

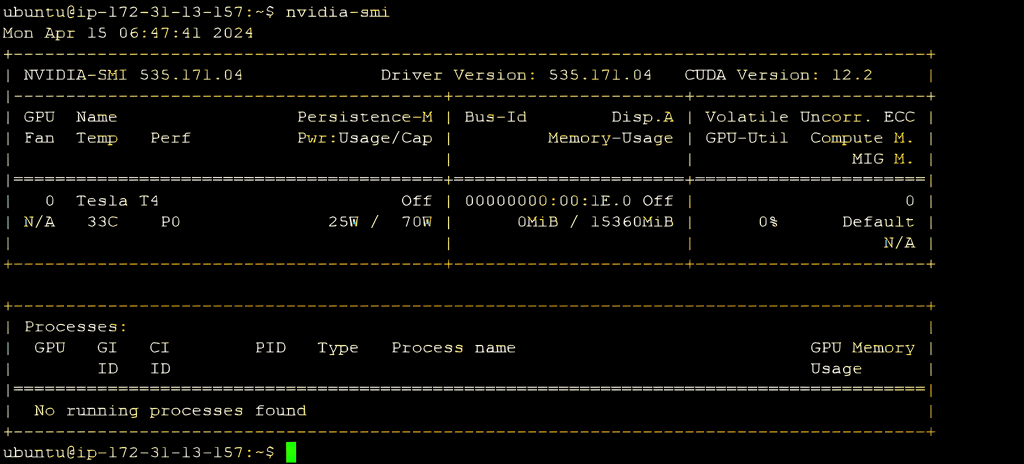
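PuTTY is one SSH client among several; the OpenSSH `ssh` command (available on Linux, macOS, and recent Windows) works the same way. Note that PuTTY expects a `.ppk` key, which PuTTYgen can convert from the `.pem` file AWS provides, while OpenSSH uses the `.pem` directly. The sketch below builds the equivalent OpenSSH command; the IP address and key path are placeholders, and it assumes the default `ubuntu` login user of Ubuntu AMIs.

```python
import os
import shlex
from typing import List

# Default login user on Ubuntu AMIs.
DEFAULT_USER = "ubuntu"


def build_ssh_command(server_ip: str, key_path: str, user: str = DEFAULT_USER) -> List[str]:
    """Argument list for an OpenSSH login equivalent to the PuTTY session."""
    # Expand "~" ourselves, since subprocess does not go through a shell.
    return ["ssh", "-i", os.path.expanduser(key_path), f"{user}@{server_ip}"]


if __name__ == "__main__":
    cmd = build_ssh_command("203.0.113.10", "~/.ssh/my-key.pem")  # placeholder IP and key
    print(shlex.join(cmd))
    # To open an interactive session: subprocess.run(cmd)
```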

6. Check NVIDIA Configuration

Verify that the GPU is available by running `nvidia-smi`, which reports the NVIDIA driver and CUDA versions, the detected GPU, and its memory usage.
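For scripted checks, `nvidia-smi` can print only the fields you need. This sketch assumes the `--query-gpu=name,memory.total --format=csv,noheader,nounits` options, which print one line per GPU with memory in MiB; `parse_gpu_query` and `query_gpus` are hypothetical helper names.

```python
import shutil
import subprocess
from typing import List, Tuple

QUERY = ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"]


def parse_gpu_query(output: str) -> List[Tuple[str, int]]:
    """Parse 'name, memory' CSV lines (memory in MiB) into (name, MiB) tuples."""
    gpus = []
    for line in output.strip().splitlines():
        name, memory = (field.strip() for field in line.split(","))
        gpus.append((name, int(memory)))
    return gpus


def query_gpus() -> List[Tuple[str, int]]:
    """Run nvidia-smi and return the visible GPUs, or [] if it is not installed."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_query(result.stdout)


if __name__ == "__main__":
    gpus = query_gpus()
    if not gpus:
        print("No NVIDIA GPU detected; check the instance type and drivers.")
    for name, mib in gpus:
        print(f"{name}: {mib} MiB")
```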

7. Manually Install FlagEmbedding

Prepare your environment for the multilingual embedding model by installing the FlagEmbedding package with pip:

    pip install -U FlagEmbedding
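If `pip` and `python3` point to different interpreters, the package may install somewhere the script cannot see it; running `python3 -m pip install -U FlagEmbedding` avoids that mismatch. The sketch below confirms the installation from inside Python using the standard library's `importlib.metadata`; `installed_version` is a hypothetical helper.

```python
from importlib import metadata
from typing import Optional


def installed_version(distribution: str) -> Optional[str]:
    """Return the installed version of a pip distribution, or None if absent."""
    try:
        return metadata.version(distribution)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    for dist in ("FlagEmbedding", "torch"):
        version = installed_version(dist)
        print(f"{dist}: {version or 'NOT INSTALLED'}")
```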

8. Prepare the Python File

Create a Python file containing the code for interacting with the BAAI/bge-m3 embedding model: the library import, model loading, and the text to embed.

    from FlagEmbedding import BGEM3FlagModel

    # Setting use_fp16 to True speeds up computation with a slight performance degradation
    model = BGEM3FlagModel('BAAI/bge-m3', use_fp16=True)

    text = "What is BGE M3?"

    # max_length is the maximum input length in tokens (BGE-M3 accepts up to 8192).
    # If you don't need such a long length, a smaller value speeds up encoding.
    embedding = model.encode(text, batch_size=12, max_length=524)['dense_vecs']

    # dense_vecs is a NumPy array; tolist() converts it to a plain Python list
    print(embedding.tolist())
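Texts longer than the 500-word limit can be split into chunks and encoded as a batch, since `BGEM3FlagModel.encode` also accepts a list of strings, as in the model card's examples. The helper below is a hypothetical sketch of word-based chunking with optional overlap. Word counts only approximate token counts, so a 500-word chunk can exceed a `max_length` of roughly 500 tokens and be truncated; choose `max_words` with that margin in mind.

```python
from typing import List


def chunk_words(text: str, max_words: int = 500, overlap: int = 0) -> List[str]:
    """Split text into chunks of at most max_words words.

    The last `overlap` words of each chunk are repeated at the start of the
    next one, which can preserve context across chunk boundaries.
    """
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be in [0, max_words)")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


if __name__ == "__main__":
    print(chunk_words("one two three four five six seven", max_words=3, overlap=1))
```

The resulting list can then be encoded in one call, e.g. `model.encode(chunks, batch_size=12, max_length=...)['dense_vecs']`, which returns one vector per chunk.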

9. Run the Python File

Run the script with `python3 your_file_name.py` to generate the embedding; the script prints the dense vector as a list of floats.

In conclusion, the multilingual embedding model can be exposed through a REST API by configuring an endpoint that accepts the input text in the request body. Once the API is set up, a Dockerfile can containerize it: building an image and running a container on the server removes the need for individual installations and makes the service easier to deploy and scale. For the container to use the GPU, the host needs the NVIDIA Container Toolkit and the container must be started with `docker run --gpus all`. This streamlined approach supports more efficient and effective interactions within chat-based applications.
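A client calls such an endpoint by POSTing JSON with the text in the body. The sketch below uses only the standard library; the URL, the `text` request field, and the `embedding` response field are assumptions about how the FastAPI app is set up, so adjust them to match your service.

```python
import json
import urllib.request

# Hypothetical endpoint; match the host, port and path of your FastAPI app.
ENDPOINT = "http://localhost:8000/embed"


def build_embed_request(text: str, url: str = ENDPOINT) -> urllib.request.Request:
    """Build a POST request whose JSON body carries the text to embed."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def fetch_embedding(text: str, url: str = ENDPOINT, timeout: float = 30.0) -> list:
    """Send the request and return the 'embedding' field of the JSON response."""
    with urllib.request.urlopen(build_embed_request(text, url), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["embedding"]


if __name__ == "__main__":
    try:
        vector = fetch_embedding("What is BGE M3?")
        print(f"received {len(vector)}-dimensional embedding")
    except OSError as exc:  # URLError is a subclass of OSError
        print(f"could not reach {ENDPOINT}: {exc}")
```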