Apache recently announced the release of Airflow 2.3.0. Since Apache Airflow 2.2.0, the project has accumulated more than 700 commits for this release, including 50 new features, 99 improvements, 85 bug fixes, and several documentation changes.

Here is a glimpse of the major updates:

- Dynamic Task Mapping (AIP-42)

- Grid View Replaces Tree View

- Purge History From the Metadata Database

- LocalKubernetesExecutor

- DagProcessorManager as standalone process (AIP-43)

- JSON Serialization for Connections

- Airflow db downgrade and Offline Generation of SQL Scripts

- Reuse of Decorated Tasks

Let’s discuss these updates in detail.

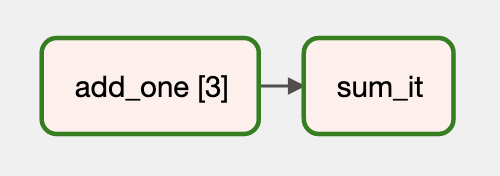

Dynamic Task Mapping (AIP-42)

Dynamic Task Mapping provides a way for the workflow to create a number of tasks at runtime based on current data, instead of the DAG author having to know in advance how many tasks would be required.

This is similar to defining tasks in a for loop, but instead of the DAG file fetching the data and doing that itself, the scheduler can do it based on the output of a previous task. Right before the mapped task is executed, the scheduler creates n copies of the task, one for each input.

It is also possible to have a downstream task operate on the collected output of the mapped task, a pattern commonly known as map and reduce.

In short, Airflow now fully supports generating tasks dynamically at runtime. As with a for loop, you get a list of tasks, but you do not need to know how many ahead of time: an upstream task can produce the list to iterate over, which a for loop in the DAG file cannot do.

See the example below:

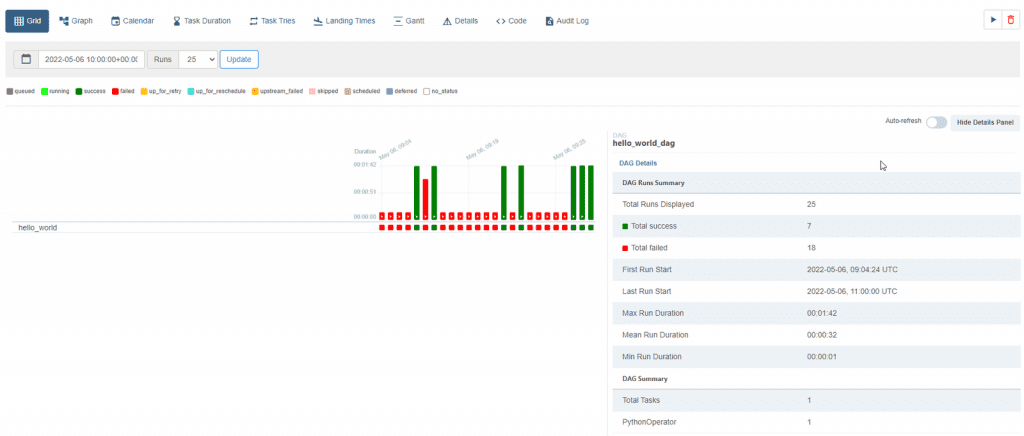

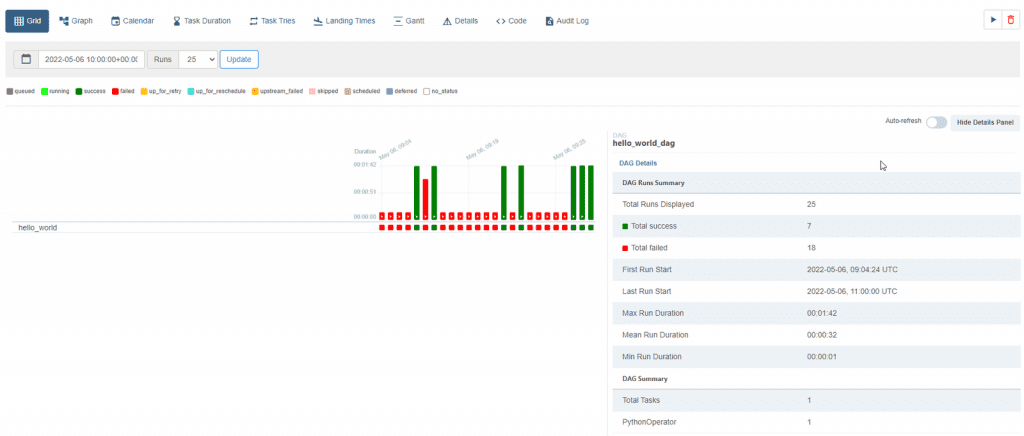
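As a rough illustration of the mechanics, here is a plain-Python simulation (no Airflow required) of what the scheduler does with a mapped task: an upstream task returns a list whose length is only known at runtime, one copy of the mapped task is created per element, and a downstream task reduces the collected results. The helper `simulate_expand` is a hypothetical stand-in for the scheduler, not Airflow code. In an actual Airflow 2.3 DAG, the mapping step is written with the TaskFlow `.expand()` method, for example `add_one.expand(x=[1, 2, 3])`, and the reduce step simply takes the mapped output as its argument.

```python
from typing import Callable, Dict, List


def make_list() -> List[int]:
    """Upstream task: how many items it returns is only known at runtime."""
    return [1, 2, 3]


def add_one(x: int) -> int:
    """Task to be mapped: each copy handles a single input."""
    return x + 1


def sum_it(values: List[int]) -> int:
    """Reduce task: operates on the collected output of every mapped copy."""
    return sum(values)


def simulate_expand(func: Callable[[int], int], inputs: List[int]) -> Dict[int, int]:
    """Hypothetical scheduler stand-in: right before the mapped task runs,
    create one task instance per input, keyed by its map index."""
    return {map_index: func(item) for map_index, item in enumerate(inputs)}


inputs = make_list()                       # produced at runtime by an upstream task
mapped = simulate_expand(add_one, inputs)  # n = len(inputs) copies of add_one
total = sum_it(list(mapped.values()))      # map and reduce

print(mapped)  # {0: 2, 1: 3, 2: 4}
print(total)   # 9
```

Because the number of copies comes from `len(inputs)`, changing what `make_list` returns changes how many mapped instances exist without touching the DAG definition, which is the point of the feature.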

Grid View Replaces Tree View

The screenshots below show the new Grid view, which replaces the Tree view in Airflow 2.3.0.

Purge History from the Metadata Database

A new command, airflow db clean, purges old records from the metadata database.

You can use this command to reduce the size of the metadata database.

Here is some more information: Purge history from metadata database
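The core idea of purging is deleting history rows older than a cutoff timestamp. The sketch below shows only that idea, using Python's built-in `sqlite3` module with a hypothetical `task_log` table; it is not Airflow's schema or implementation. Against a real deployment you would run the CLI instead, for example `airflow db clean --clean-before-timestamp '2022-01-01 00:00:00+00:00' --dry-run` to preview the affected row counts before deleting anything.

```python
import sqlite3
from datetime import datetime, timedelta, timezone


def purge_before(conn: sqlite3.Connection, cutoff: datetime, dry_run: bool = False) -> int:
    """Delete (or, with dry_run=True, only count) rows older than `cutoff`.

    Timestamps are stored as ISO-8601 strings with the same UTC offset,
    so string comparison matches chronological order.
    """
    cutoff_iso = cutoff.isoformat()
    if dry_run:
        (count,) = conn.execute(
            "SELECT COUNT(*) FROM task_log WHERE dttm < ?", (cutoff_iso,)
        ).fetchone()
        return count
    with conn:  # commit the delete as a single transaction
        cur = conn.execute("DELETE FROM task_log WHERE dttm < ?", (cutoff_iso,))
    return cur.rowcount


# Hypothetical table standing in for a history table in the metadata DB.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE task_log (id INTEGER PRIMARY KEY, dttm TEXT)")
now = datetime(2022, 5, 1, tzinfo=timezone.utc)
rows = [(now - timedelta(days=d)).isoformat() for d in (1, 10, 40, 90)]
conn.executemany("INSERT INTO task_log (dttm) VALUES (?)", [(r,) for r in rows])
conn.commit()

cutoff = now - timedelta(days=30)
print(purge_before(conn, cutoff, dry_run=True))  # 2 (rows that would be removed)
print(purge_before(conn, cutoff))                # 2 (rows actually removed)
print(conn.execute("SELECT COUNT(*) FROM task_log").fetchone()[0])  # 2 (rows left)
```

Running the dry run first, as the CLI's `--dry-run` option allows, is a cheap way to confirm the cutoff before anything is deleted.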

LocalKubernetesExecutor

Airflow 2.3.0 introduces a new executor, LocalKubernetesExecutor, which lets you run some tasks with the LocalExecutor and others with the KubernetesExecutor in the same deployment, based on each task’s queue.

Here is some more information: LocalKubernetesExecutor
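The routing rule can be sketched in a few lines of Python. This is a simplified model, not the executor's source: tasks whose queue matches the Kubernetes queue go to the KubernetesExecutor and all others to the LocalExecutor. The queue name `kubernetes` is assumed here as the value of the `kubernetes_queue` option in the `[local_kubernetes_executor]` config section; check the configuration reference for your version. In a real DAG you would choose the executor for a task by passing `queue="kubernetes"` to its operator.

```python
from dataclasses import dataclass
from typing import Dict, List

# Assumed default; in Airflow this comes from [local_kubernetes_executor] kubernetes_queue.
KUBERNETES_QUEUE = "kubernetes"


@dataclass
class TaskInstance:
    """Minimal hypothetical task record: only the fields routing needs."""
    task_id: str
    queue: str = "default"


def choose_executor(ti: TaskInstance, kubernetes_queue: str = KUBERNETES_QUEUE) -> str:
    """Route by queue: the Kubernetes queue goes to the KubernetesExecutor,
    everything else runs on the LocalExecutor."""
    return "KubernetesExecutor" if ti.queue == kubernetes_queue else "LocalExecutor"


def route_all(tis: List[TaskInstance]) -> Dict[str, List[str]]:
    """Group task ids by the executor that would run them."""
    routes: Dict[str, List[str]] = {"LocalExecutor": [], "KubernetesExecutor": []}
    for ti in tis:
        routes[choose_executor(ti)].append(ti.task_id)
    return routes


tasks = [
    TaskInstance("extract"),                          # lightweight, run locally
    TaskInstance("train_model", queue="kubernetes"),  # heavy, run in its own pod
    TaskInstance("notify"),
]
print(route_all(tasks))
# {'LocalExecutor': ['extract', 'notify'], 'KubernetesExecutor': ['train_model']}
```

The appeal of this design is that most tasks keep the low overhead of local execution, while only the tasks that need isolation or extra resources pay the cost of starting a pod.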

DagProcessorManager as standalone process (AIP-43)

In Airflow 2.3.0, the DagProcessorManager can run as a standalone process. Because the DagProcessorManager runs user code, it is better to separate it from the scheduler and run it as an independent process on a different host.

The new airflow dag-processor CLI command starts the DagProcessorManager in its own process. Before the DagProcessorManager can run this way, you must set the [scheduler] standalone_dag_processor option to True.

Here is some more information: dag-processor CLI command
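Airflow also reads any config option from an environment variable named `AIRFLOW__{SECTION}__{KEY}`, which is convenient on a dedicated DAG-processing host. The sketch below, with hypothetical helper names, builds that environment and the `airflow dag-processor` command line; actually launching the process is left commented out, since it needs an Airflow installation.

```python
import os
import shlex
from typing import Dict, List, Tuple


def airflow_env_var(section: str, key: str) -> str:
    """Name of the environment variable Airflow checks for `[section] key`."""
    return f"AIRFLOW__{section.upper()}__{key.upper()}"


def dag_processor_launch(base_env: Dict[str, str]) -> Tuple[Dict[str, str], List[str]]:
    """Hypothetical helper: return (environment, command) for running the
    DagProcessorManager as a standalone process."""
    env = dict(base_env)  # copy so the caller's environment is not modified
    env[airflow_env_var("scheduler", "standalone_dag_processor")] = "True"
    return env, ["airflow", "dag-processor"]


env, cmd = dag_processor_launch(dict(os.environ))
print(airflow_env_var("scheduler", "standalone_dag_processor"))
# AIRFLOW__SCHEDULER__STANDALONE_DAG_PROCESSOR
print(shlex.join(cmd))  # airflow dag-processor

# On a host with Airflow installed, the process could then be started with:
# import subprocess; subprocess.run(cmd, env=env, check=True)
```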


JSON Serialization for Connections

Connections can now be defined using a JSON serialization format.

The JSON format can also be used when setting connections through environment variables.

Here is some more information: JSON serialization for connections
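For example, a connection could be defined in an environment variable as a JSON document instead of a URI. This is a minimal sketch with made-up connection details; the keys (`conn_type`, `login`, `password`, `host`, `port`, `schema`, `extra`) follow the JSON format described in the Airflow connection docs, and the variable name follows the `AIRFLOW_CONN_{CONN_ID}` convention.

```python
import json
import os

# Made-up connection details; the keys follow Airflow's JSON connection format.
conn = {
    "conn_type": "postgres",
    "login": "my-login",
    "password": "my-password",
    "host": "db.example.com",
    "port": 5432,
    "schema": "analytics",
    "extra": {"sslmode": "require"},
}

# Environment connections use AIRFLOW_CONN_{CONN_ID} with the id upper-cased,
# so this one would be available to Airflow under the conn_id "my_prod_db".
env_name = "AIRFLOW_CONN_MY_PROD_DB"
os.environ[env_name] = json.dumps(conn)

# The value is plain JSON, so it parses back unchanged.
parsed = json.loads(os.environ[env_name])
print(parsed["host"], parsed["port"])  # db.example.com 5432
```

In a shell you would typically do the same with `export AIRFLOW_CONN_MY_PROD_DB='{...}'`. Compared with a URI, the JSON form can be easier to read, especially for nested `extra` fields.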

Airflow DB Downgrade and Offline Generation of SQL Scripts

Airflow 2.3.0 adds a new command, airflow db downgrade, which downgrades the metadata database to a version of your choice.

You can also generate the upgrade or downgrade SQL scripts for your database offline, and then either run them against the database manually or simply review the SQL queries that the upgrade/downgrade command would execute.

Here is some more information: Airflow DB downgrade and Offline generation of SQL scripts

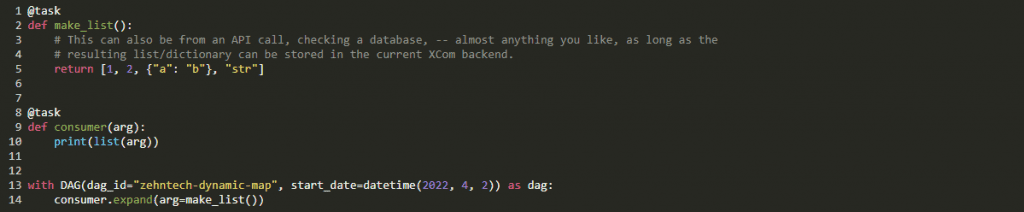

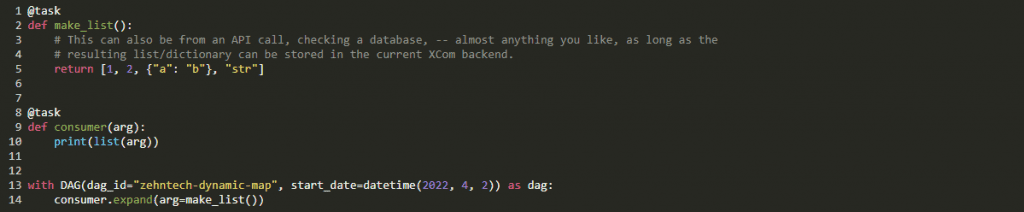

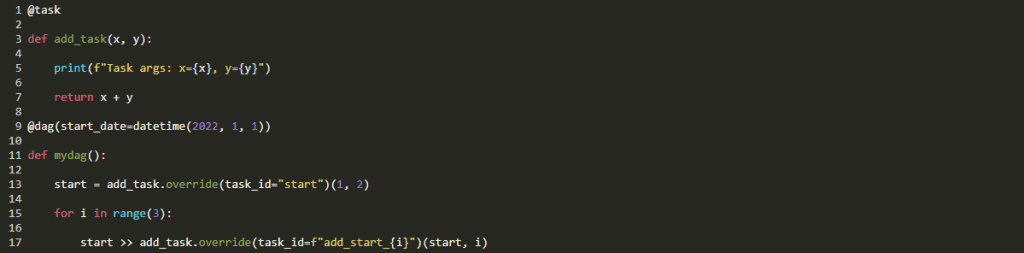

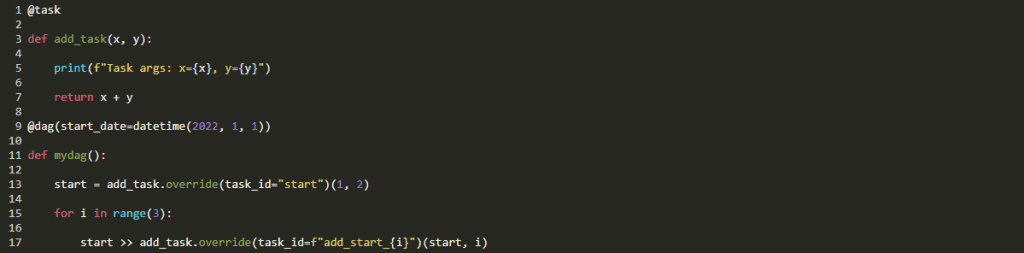
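As a sketch of how these options fit together, the helper below (a hypothetical function, not part of Airflow) only assembles the command line, so it runs without Airflow installed. It assumes the `--to-version`, `--from-version`, and `--show-sql-only` flags from the 2.3 CLI reference; the commands also accept Alembic revisions instead of versions, which this sketch leaves out. Verify the flags with `airflow db downgrade --help` on your installation.

```python
import shlex
from typing import List, Optional


def db_migration_cmd(
    action: str,
    to_version: Optional[str] = None,
    from_version: Optional[str] = None,
    sql_only: bool = False,
) -> List[str]:
    """Build an `airflow db upgrade` / `airflow db downgrade` command line.

    With sql_only=True the command is meant to print the SQL instead of
    running it, so the script can be reviewed or applied manually.
    """
    if action not in ("upgrade", "downgrade"):
        raise ValueError(f"unsupported action: {action!r}")
    if action == "downgrade" and to_version is None:
        raise ValueError("a downgrade needs a target version")
    cmd = ["airflow", "db", action]
    if from_version:
        cmd += ["--from-version", from_version]
    if to_version:
        cmd += ["--to-version", to_version]
    if sql_only:
        cmd.append("--show-sql-only")
    return cmd


print(shlex.join(db_migration_cmd("downgrade", to_version="2.2.0", sql_only=True)))
# airflow db downgrade --to-version 2.2.0 --show-sql-only
```

Generating the SQL first and reviewing it is a reasonable habit before any schema change on a production metadata database.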

Reuse of decorated tasks

Decorated tasks can be reused across DAG files. A decorated task has an override method that lets you override its arguments, such as its task_id.

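The toy model below imitates the shape of the override method in plain Python so the behaviour can be seen without Airflow: `override()` returns a copy with some arguments changed and leaves the original definition untouched, which is what makes one decorated task reusable under different task IDs. The `Task` class and `task` decorator here are hypothetical stand-ins, not Airflow's implementation. With real Airflow, the equivalent call is `add_task.override(task_id="start")(1, 2)`, where `add_task` can be imported from a shared module into several DAG files.

```python
import copy
from typing import Any, Callable, Dict


class Task:
    """Toy stand-in for a decorated task (hypothetical, not Airflow's class)."""

    def __init__(self, func: Callable[..., Any]) -> None:
        self.func = func
        self.kwargs: Dict[str, Any] = {"task_id": func.__name__}

    def override(self, **kwargs: Any) -> "Task":
        """Return a copy with some arguments replaced; the original task
        definition is left untouched so it can be reused elsewhere."""
        clone = copy.copy(self)
        clone.kwargs = {**self.kwargs, **kwargs}
        return clone

    def __call__(self, *args: Any) -> Dict[str, Any]:
        # Pretend to instantiate the task: record its id and its result.
        return {"task_id": self.kwargs["task_id"], "result": self.func(*args)}


def task(func: Callable[..., Any]) -> Task:
    """Toy decorator mimicking the shape of @task."""
    return Task(func)


@task
def add_task(x: int, y: int) -> int:
    return x + y


start = add_task.override(task_id="start")(1, 2)
others = [add_task.override(task_id=f"add_start_{i}")(start["result"], i) for i in range(3)]
print(start)                           # {'task_id': 'start', 'result': 3}
print([o["task_id"] for o in others])  # ['add_start_0', 'add_start_1', 'add_start_2']
print(add_task.kwargs["task_id"])      # add_task
```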

Other small features

This is not an exhaustive list, but some noteworthy or interesting small features include:

- Support for different timeout values for DAG file parsing.

- The airflow dags reserialize command, which reserializes DAGs.

- The Events Timetable.

- SmoothOperator: an operator that does literally nothing except log a YouTube link to Sade’s “Smooth Operator”. Enjoy!