Web scraping is the process of extracting useful data from websites. It plays an important role in data analysis and competitive analysis, and Python makes it easy to automate data collection through web scraping.

In machine learning, training a model requires preparing a dataset, and collecting that data can be time-consuming. Using Python libraries to scrape data from multiple websites shortens this process, making data extraction simpler and saving developers a lot of time. The collected data can also be stored in databases for future use and analysis, which is especially valuable for data scientists who work with large and diverse datasets.
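As a minimal sketch of that storage step, the example below saves scraped records with Python's built-in sqlite3 module (Python 3.9+ for the type hints). The table name `products`, its columns, and the sample rows are hypothetical; a real project would connect to a database file (for example `scraped.db`) rather than the in-memory database used here.

```python
import sqlite3


def store_products(conn: sqlite3.Connection, rows: list[tuple[str, float]]) -> None:
    """Create a hypothetical 'products' table if needed and insert scraped rows."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS products (name TEXT NOT NULL, price REAL)"
    )
    # Parameterized query: values are passed separately, never formatted into the SQL string
    conn.executemany("INSERT INTO products (name, price) VALUES (?, ?)", rows)
    conn.commit()


if __name__ == "__main__":
    # ":memory:" keeps the database in RAM; pass a filename to persist it on disk
    conn = sqlite3.connect(":memory:")
    store_products(conn, [("Laptop", 799.0), ("Mouse", 19.99)])
    for name, price in conn.execute("SELECT name, price FROM products ORDER BY price"):
        print(name, price)
    conn.close()
```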

Web scraping provides insight that supports growth on e-commerce platforms. It helps businesses make better decisions and gives a view of the market based on patterns and trends in the data.

In e-commerce, web scraping helps gather information about multiple sellers who list products in the same category under different prices, names, and titles.

Benefits of Using Python for Data Scraping

Libraries

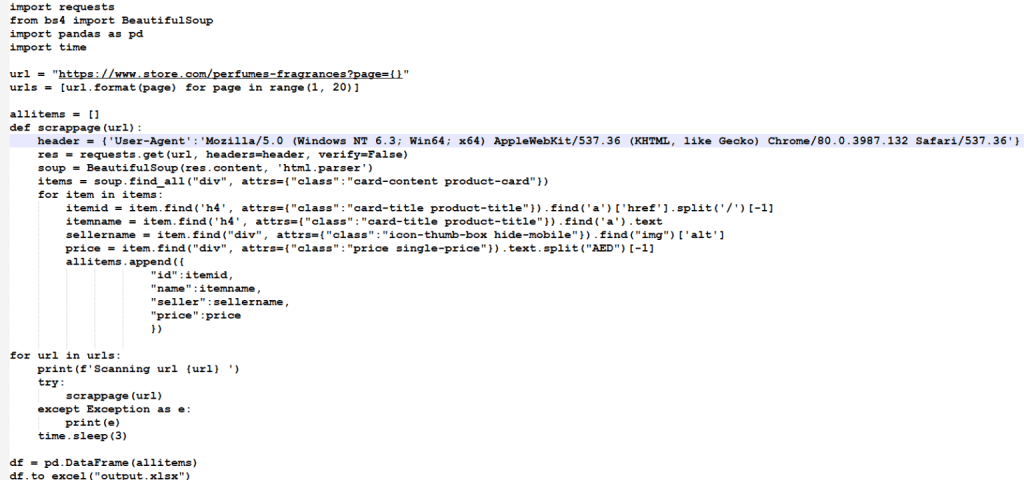

Python is well known for its wide range of libraries, which support tasks across many fields. For extracting data from websites and APIs, it offers libraries such as BeautifulSoup, Selenium, Requests, lxml, and Scrapy, along with libraries for data analysis such as pandas and NumPy.
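To see which of these libraries are already installed in the current environment, a small sketch using the standard library's `importlib.util.find_spec` could look like this (Python 3.9+ for the type hints). The mapping from library names to import names is written out by hand; some import names differ from the names used to install them (BeautifulSoup is installed as `beautifulsoup4` but imported as `bs4`).

```python
import importlib.util

# Library name -> top-level import name (written by hand for this sketch)
LIBRARIES = {
    "BeautifulSoup": "bs4",
    "Selenium": "selenium",
    "Requests": "requests",
    "lxml": "lxml",
    "Scrapy": "scrapy",
    "pandas": "pandas",
    "NumPy": "numpy",
}


def available_libraries() -> dict[str, bool]:
    """Report which libraries can be found in the current environment."""
    # find_spec returns None when a top-level module cannot be located;
    # it does not import the module itself.
    return {
        name: importlib.util.find_spec(module) is not None
        for name, module in LIBRARIES.items()
    }


if __name__ == "__main__":
    for name, installed in available_libraries().items():
        print(f"{name:<14} {'installed' if installed else 'missing'}")
```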

Easy to use

Python does not require curly braces or semicolons, which makes its code easy to read and understand. You can perform web scraping with only a few lines of code and minimal effort.
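As an illustration of how little code is needed, the sketch below uses BeautifulSoup to pull the page title and all link targets out of a small HTML snippet. The HTML is a hard-coded, made-up sample standing in for a downloaded page, so the example runs without network access.

```python
from bs4 import BeautifulSoup

# A small made-up page standing in for HTML downloaded from a website
html = """
<html>
  <head><title>Sample Store</title></head>
  <body>
    <a href="/laptops">Laptops</a>
    <a href="/phones">Phones</a>
  </body>
</html>
"""

soup = BeautifulSoup(html, "html.parser")  # parse with Python's built-in parser
title = soup.title.string  # text inside <title>
links = [a["href"] for a in soup.find_all("a")]  # every link target on the page

print(title)  # Sample Store
print(links)  # ['/laptops', '/phones']
```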

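Code Debugging

Python is an interpreted language: when a runtime error occurs, execution stops at the line that caused it and Python prints a traceback showing the file, line number, and error type (syntax errors are reported before the script starts running). This makes debugging straightforward.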
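A common scraping bug shows this in practice. BeautifulSoup's `find()` returns `None` when no element matches, so calling `.get_text()` on the result raises an `AttributeError`, and the traceback points at that exact line. The sketch below checks for the missing element instead; the `span` tag with class `price` is a hypothetical example of where a site might keep a product price.

```python
import logging
from typing import Optional

from bs4 import BeautifulSoup

logging.basicConfig(level=logging.WARNING)
logger = logging.getLogger(__name__)


def extract_price(html: str) -> Optional[str]:
    """Return the text of the first <span class="price">, or None if it is missing.

    The "price" class name is a hypothetical example; real sites use their own markup.
    """
    soup = BeautifulSoup(html, "html.parser")
    tag = soup.find("span", class_="price")
    if tag is None:
        # Calling tag.get_text() here would raise
        # AttributeError: 'NoneType' object has no attribute 'get_text'
        logger.warning("No <span class='price'> element found")
        return None
    return tag.get_text(strip=True)


print(extract_price('<span class="price"> $19.99 </span>'))  # $19.99
print(extract_price("<p>Out of stock</p>"))  # None
```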

Environment Setup

A virtual environment is an isolated environment for installing the packages a project requires, so they do not conflict with packages used by other projects. Python's built-in venv module creates one: it adds a separate folder in the current working directory where the project's packages are installed.

Steps to create a virtual environment on Windows

Step 1: Create a virtual environment using the command:

python -m venv venv

Step 2: Activate the virtual environment:

venv\Scripts\activate

Step 3: Install the required packages:

pip install package_name
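The same steps can also be checked or automated from Python itself. The sketch below uses only the standard library: `in_virtualenv()` compares `sys.prefix` with `sys.base_prefix` to tell whether a virtual environment is active, and `create_env()` calls `venv.create`, the programmatic counterpart of `python -m venv`. Both function names are hypothetical helpers, not part of any library.

```python
import sys
import tempfile
import venv
from pathlib import Path


def in_virtualenv() -> bool:
    """Return True if this interpreter is running inside a venv-created environment."""
    # Inside a venv, sys.prefix points at the environment folder while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


def create_env(path: Path, with_pip: bool = True) -> None:
    """Programmatic equivalent of `python -m venv <path>`."""
    venv.create(path, with_pip=with_pip)


if __name__ == "__main__":
    print("Inside a virtual environment:", in_virtualenv())
    # Create a throwaway environment in a temporary folder (pip skipped for speed)
    with tempfile.TemporaryDirectory() as tmp:
        env_dir = Path(tmp) / "venv"
        create_env(env_dir, with_pip=False)
        print("pyvenv.cfg exists:", (env_dir / "pyvenv.cfg").exists())
```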

Web Data Scraping with Python

Package Descriptions

- Requests – The Requests library sends HTTP requests to a website; a GET request retrieves the page's content, such as its HTML.

- BeautifulSoup – The BeautifulSoup library pulls data out of HTML. After inspecting the website in the browser to find the tags that hold the data, you use BeautifulSoup with a parser (such as Python's built-in html.parser or lxml) to search the resulting parse tree.

- Pandas – Pandas is used for data analysis and data cleaning and is one of the most commonly used Python libraries in data science. It provides data structures such as the DataFrame, along with methods for data manipulation.
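As a sketch of how pandas fits in after scraping, the example below takes hypothetical product records (the same item listed by several sellers, as in the e-commerce case described earlier) and cleans them: it strips currency symbols and thousands separators, converts prices to numbers, drops duplicate rows, and finds the cheapest listing per product. The column names and sample values are made up for illustration.

```python
import pandas as pd

# Hypothetical scraped records: the same products listed by different sellers
records = [
    {"product": "Wireless Mouse", "seller": "ShopA", "price": "$19.99"},
    {"product": "Wireless Mouse", "seller": "ShopB", "price": "$17.50"},
    {"product": "Wireless Mouse", "seller": "ShopB", "price": "$17.50"},  # duplicate row
    {"product": "Laptop", "seller": "ShopA", "price": "$1,299.00"},
    {"product": "Laptop", "seller": "ShopC", "price": "$1,249.00"},
]

df = pd.DataFrame(records)

# Cleaning: remove "$" and "," from the price strings, then convert to float
df["price"] = df["price"].str.replace(r"[$,]", "", regex=True).astype(float)
df = df.drop_duplicates()

# Analysis: the row with the lowest price for each product
cheapest = df.loc[df.groupby("product")["price"].idxmin()]
print(cheapest)
```

To keep the cleaned results, `df.to_csv("products.csv", index=False)` writes them to a CSV file.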

Steps to start scraping in Python

- First, create and activate the virtual environment using the commands above, then install the packages required for scraping, for example: pip install requests beautifulsoup4 pandas

- Create a Python file (for example, scraper.py) to hold the code that scrapes the website.
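Putting the packages together, a minimal scraper file (here called `scraper.py`, a hypothetical name) might look like the sketch below, assuming Python 3.9+: Requests downloads the page, BeautifulSoup extracts the fields, and pandas saves them to a CSV file. The `div.product`, `h2`, and `span.price` selectors and the `products.csv` filename are placeholders; inspect the target page in your browser to find the tags it actually uses.

```python
import sys

import pandas as pd
import requests
from bs4 import BeautifulSoup


def fetch_html(url: str) -> str:
    """Download a page with a GET request and return its HTML."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()  # raise an error for 4xx/5xx responses
    return response.text


def parse_products(html: str) -> list[dict[str, str]]:
    """Extract name/price pairs; the CSS selectors are hypothetical placeholders."""
    soup = BeautifulSoup(html, "html.parser")
    products = []
    for card in soup.select("div.product"):
        name = card.select_one("h2")
        price = card.select_one("span.price")
        if name is None or price is None:
            continue  # skip cards missing either field
        products.append({
            "name": name.get_text(strip=True),
            "price": price.get_text(strip=True),
        })
    return products


def main(argv: list[str]) -> None:
    if not argv:
        print("usage: python scraper.py URL")
        return
    df = pd.DataFrame(parse_products(fetch_html(argv[0])))
    df.to_csv("products.csv", index=False)  # store results for later analysis
    print(f"Saved {len(df)} products to products.csv")


if __name__ == "__main__":
    main(sys.argv[1:])
```

Run it as `python scraper.py https://example.com/products`, replacing the placeholder URL with the page you want to scrape. Keeping parsing in its own function lets you test it against saved HTML without making network requests.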

To sum up, there are many reasons to collect data from the web, and Python makes it easy to automate the process. By reducing development effort, Python saves time and keeps the process simple. Keep reading for more tech-related articles like this one.